Integrating AI Features for Android App Development: Enhancing Functionality and User Experiences

Table of Contents

- Understanding the Role of AI in Android App Development

- Exploring Common User Flows with AI: Case Studies in Android Apps

- Utilizing Machine Learning Kits for Android Development: A Comprehensive Guide

- Enhancing App Functionality with TensorFlow Lite: A Quickstart Guide

- Harnessing the Power of On-Device Machine Learning for Android Apps

- Integrating Text Recognition and Face Detection Features in Android Apps

- Leveraging Low Latency AI Features for Improved User Experience in Android Apps

- Future Trends: The Impact of AI on the Evolution of Android App Development

Introduction

Artificial Intelligence (AI) has become a major force in Android app development, reshaping user experiences and expanding what apps can do. From text recognition to face detection, AI-powered features are changing the way users interact with Android apps, enabling personalized recommendations, real-time translation, improved security, and much more. Given the rapid evolution of AI and the projected growth of the mobile app market, leveraging AI has become an important consideration for Android developers. In this article, we explore the impact of AI on Android app development, discuss real-world case studies, and look at future trends that will shape the evolution of Android apps. Whether you’re a developer or a business owner, understanding the role of AI in Android app development is key to staying competitive in today’s digital landscape.

1. Understanding the Role of AI in Android App Development

Artificial Intelligence (AI) has turned Android app development into a more dynamic and adaptive field, giving rise to intelligent applications. These apps offer features that elevate user interactions, and they have had a significant impact on user engagement and retention rates.

By employing AI, Android apps can automate tasks and deliver personalized content. They can also predict user behavior, which lets them tailor the experience closely to each user.

AI’s ability to process and analyze vast amounts of data offers developers crucial insights to enhance their apps’ functionality and performance, leading to a more streamlined and intuitive user experience.

In addition, AI has brought features such as face and speech recognition to mobile apps, making them more interactive and user-friendly. AI’s influence in mobile app development also extends to improving security, automating tasks, and increasing efficiency.

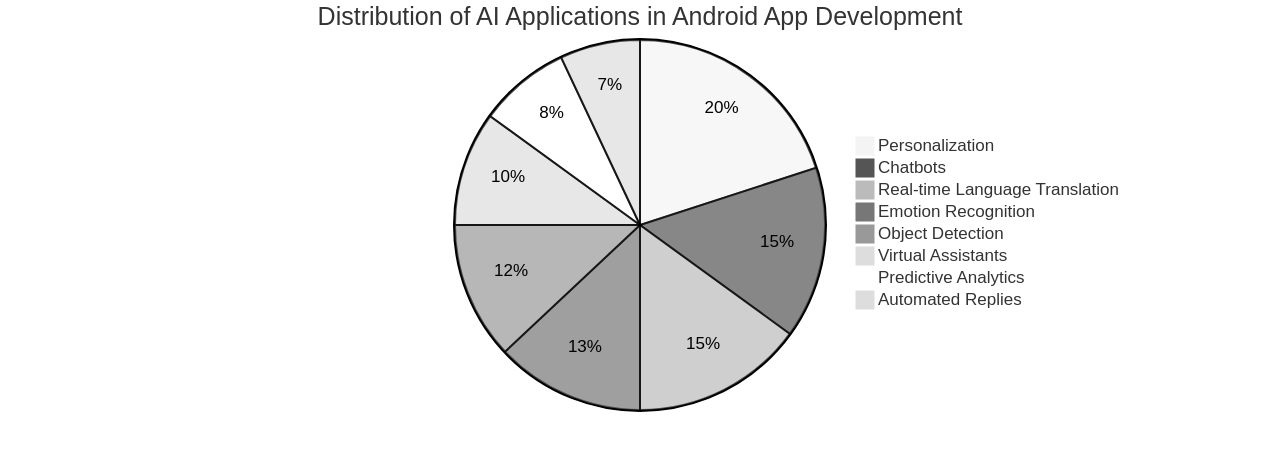

From personalization and contextual searching to chatbots, object detection, virtual assistants, predictive analytics, automated replies, real-time language translation, and emotion recognition, AI offers a diverse range of possibilities in mobile app development.

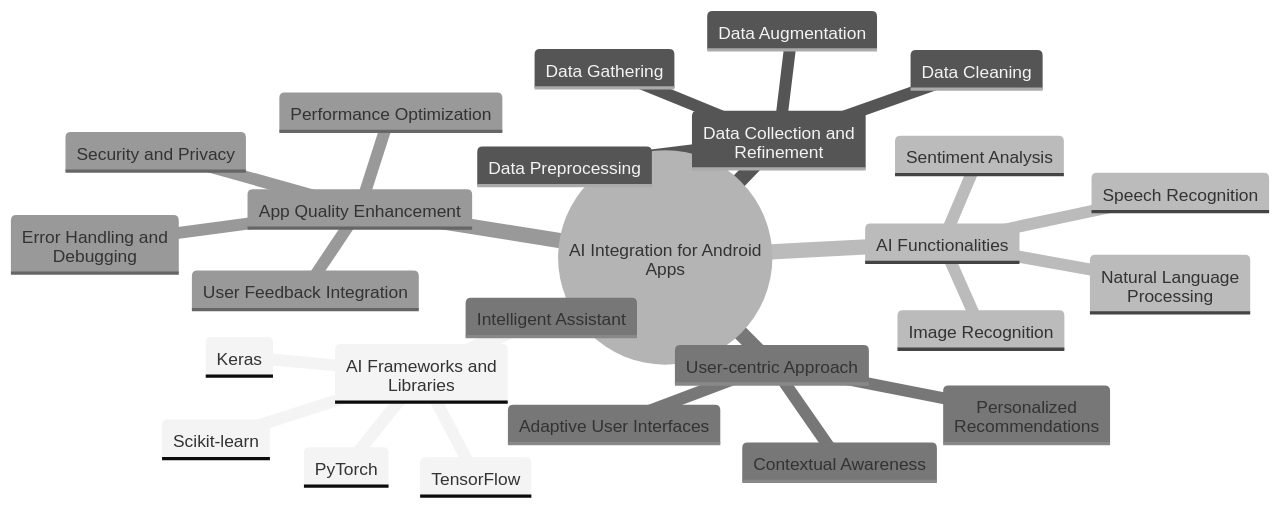

Integrating AI in mobile apps is a deliberate process that requires meticulous planning and execution.

Developers must identify the problem they aim to solve, the data they need to collect and refine, the metrics that will measure effectiveness, and any practical changes the app may require.

For example, building an image-recognition app with React Native and TensorFlow involves several steps: initial setup, model training, adding labels, initializing the TFImageRecognition API class, and calling the recognize function.

Best practices in AI-powered mobile app development, such as using appropriate code editors, analyzing raw data, adopting a user-centric approach, using Python dictionaries, and utilizing low-code platforms, can enhance productivity and app quality.

With the mobile app market projected to exceed 613 million by 2025 and the AI software market predicted to reach USD 126 billion by the same year, integrating AI into Android apps is becoming increasingly important.

To integrate AI features in Android app development, developers can leverage various AI frameworks and libraries available for Android. These frameworks provide pre-trained models and APIs to incorporate AI functionalities into your app, enabling features such as natural language processing, image recognition, voice recognition, and sentiment analysis.

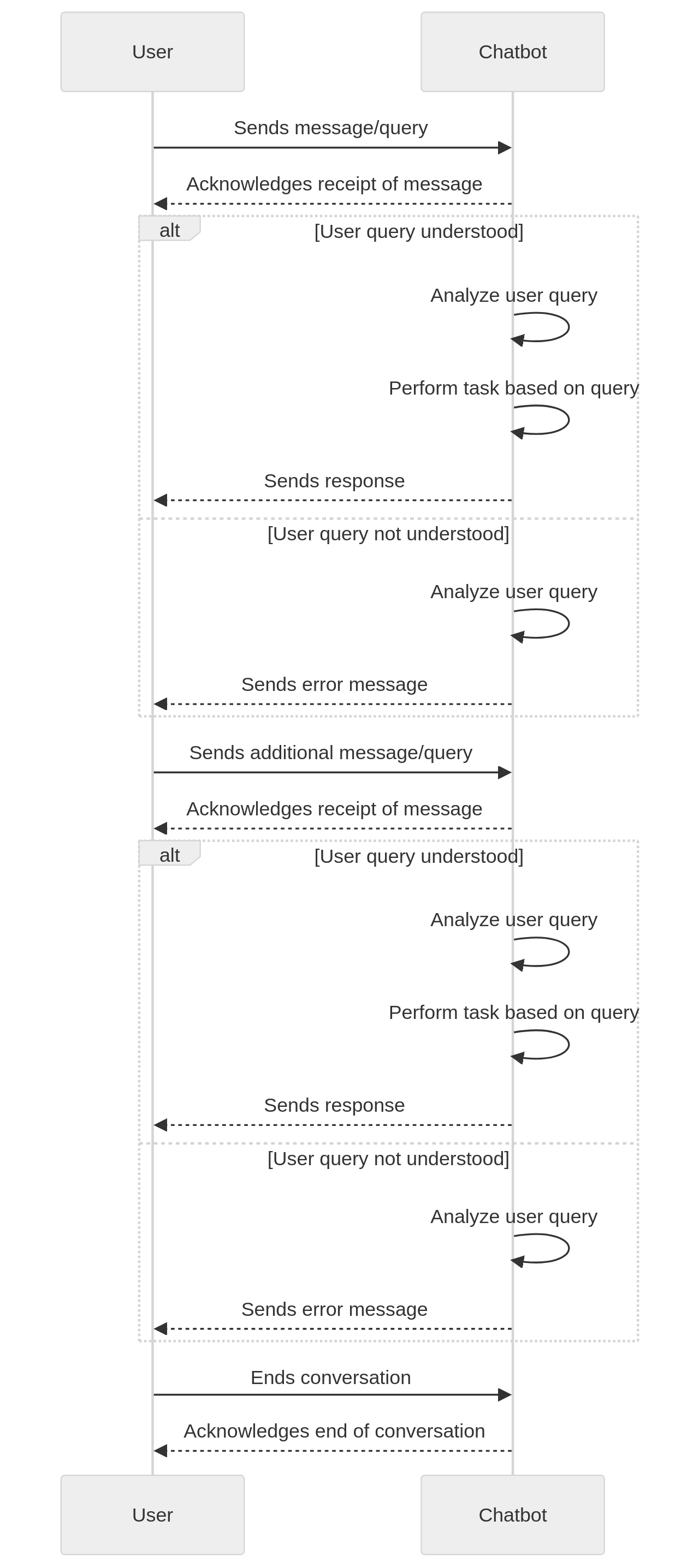

Automating tasks using AI in Android apps can be achieved using various techniques and tools. One approach could be integrating AI-powered chatbots or virtual assistants into your app, which can understand user queries and perform tasks based on the input.
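To make the chatbot idea concrete, here is a minimal Python sketch of the routing step: a free-text query is mapped to an intent, which the app then turns into an action. It swaps in simple keyword overlap for the natural language understanding model a real assistant would use, and the intent names, keywords, and actions are hypothetical examples for a shopping app.

```python
import re

# Hypothetical intents for a shopping app, each with example trigger words.
INTENTS = {
    "track_order": {"track", "order", "shipping", "delivery", "where"},
    "cancel_order": {"cancel", "order", "refund"},
    "search_product": {"find", "search", "show", "buy"},
}

# Hypothetical app actions for each intent (plain descriptions here).
ACTIONS = {
    "track_order": "open order-tracking screen",
    "cancel_order": "open cancellation flow",
    "search_product": "run product search",
    "fallback": "show help / hand off to a human agent",
}


def tokenize(text):
    """Lowercase the query and split it into a set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def classify_intent(query, min_overlap=1):
    """Return the intent whose keywords overlap the query the most.

    Returns "fallback" when no intent shares at least min_overlap words.
    """
    tokens = tokenize(query)
    best_intent, best_score = "fallback", 0
    for intent, keywords in INTENTS.items():
        score = len(tokens & keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent if best_score >= min_overlap else "fallback"


print(ACTIONS[classify_intent("Where is my order?")])  # prints "open order-tracking screen"
```

In an Android app the same logic would be written in Kotlin or Java, with the keyword matcher replaced by an on-device text classifier or a cloud NLU service; the `fallback` intent is where the app would show help or hand off to a human.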

Additionally, AI algorithms can analyze user behavior and preferences, automating certain tasks or providing personalized recommendations.

To predict user behavior in Android apps, developers can use a variety of techniques and technologies. By analyzing user data such as app usage patterns, preferences, and interactions, AI algorithms can be trained to predict future user behavior. These predictions can help personalize the user experience, recommend relevant content, and optimize app features to increase user engagement and satisfaction.
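As a minimal illustration of learning from app usage patterns, the Python sketch below trains a first-order Markov model on recorded screen-to-screen navigation and predicts the most likely next screen. The screen names and session data are hypothetical, and a production app would typically use a richer model, but the principle of estimating future behavior from past interaction counts is the same.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class NextScreenPredictor:
    """First-order Markov model: estimates P(next screen | current screen)."""

    def __init__(self) -> None:
        # transitions[a][b] counts how often the user went from screen a to b.
        self.transitions: dict[str, Counter] = defaultdict(Counter)

    def train(self, sessions: list[list[str]]) -> None:
        """Count consecutive screen pairs in each recorded session."""
        for session in sessions:
            for current, nxt in zip(session, session[1:]):
                self.transitions[current][nxt] += 1

    def predict(self, current: str) -> str | None:
        """Return the most frequent next screen, or None if never seen."""
        counts = self.transitions.get(current)
        if not counts:
            return None
        return counts.most_common(1)[0][0]

    def probability(self, current: str, nxt: str) -> float:
        """Estimated P(nxt | current); 0.0 for unseen transitions."""
        counts = self.transitions.get(current)
        if not counts:
            return 0.0
        return counts[nxt] / sum(counts.values())


# Hypothetical navigation logs, one list of screens per session.
model = NextScreenPredictor()
model.train([
    ["home", "search", "product", "cart"],
    ["home", "search", "product"],
    ["home", "profile"],
])
```

An app could use `predict` to prefetch the content of the likely next screen or to surface it as a shortcut.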

Analyzing data with AI can significantly improve app functionality. By leveraging AI algorithms, developers can gain insights from large amounts of data generated by app usage. This can help identify patterns, trends, and user preferences, allowing for the implementation of personalized features and recommendations. AI can also assist in detecting and addressing bugs or performance issues, leading to a smoother and more efficient app experience for users.

In essence, integrating AI into Android apps offers enhanced functionality, improved user experiences, and features that make apps smarter and more intuitive. Its potential is vast, and it is likely to play a defining role in the future of the mobile app industry.

2. Exploring Common User Flows with AI: Case Studies in Android Apps

Incorporating Artificial Intelligence (AI) into Android applications has improved user experiences and changed how users interact with apps. Real-world applications show how AI has elevated app functionality. In e-commerce, for example, AI-powered recommendation systems have greatly simplified product discovery, helping drive a surge in sales.

Likewise, AI-driven personal assistants in productivity apps have streamlined task management, resulting in a significant boost in user productivity. These instances offer a glimpse into the transformative potential of AI in enhancing user interactions in Android apps.

Not every improvement to a user flow comes from AI itself. Kayak, a travel search engine, offers a striking example of a platform feature that improves both security and user experience: it introduced passkeys into its Android and web apps. Passkeys replace passwords with cryptographic key pairs; the private key stays on the user’s device (or in their password manager), so there is no password to forget. This approach cut sign-in time by 50% and reduced support tickets related to forgotten passwords. Kayak built the integration with the Credential Manager API and RxJava, showing how modern Android APIs can deliver practical, efficient solutions.

App quality matters as much as individual features. Headspace, a popular mindfulness app, spent eight months refactoring its Android app to improve its architecture and add new wellness and fitness features. The team rewrote the app in Kotlin and adopted a Model-View-ViewModel (MVVM) architecture. This clear separation of concerns improved the app logic and sped up feature development, and Headspace saw a 15% increase in monthly active users (MAU). The focus on app excellence and better ratings also contributed to a 20% increase in paid Android subscribers, illustrating how a solid architecture lays the groundwork for engaging features, AI-driven or otherwise.

Incorporating AI into Android apps requires adherence to best practices to ensure optimal performance and user experience. Some of these best practices include selecting the right AI framework, optimizing for mobile devices, using cloud-based AI services, considering privacy and data security, and testing and optimization. These practices can guide the successful implementation of AI in Android apps, delivering a seamless and efficient user experience.

Several available AI solutions can streamline task management in Android apps. They use machine learning algorithms to analyze user behavior and provide personalized task recommendations, automate repetitive tasks, and prioritize tasks based on their importance and deadlines. AI can also power smart reminders and notifications that adapt to the user’s preferences and behavior patterns.
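The prioritization idea can be sketched with a simple scoring function that blends a task’s importance with how close its deadline is. This is a hand-tuned heuristic standing in for a learned model (in an ML-based version, the weighting would be learned from which tasks the user actually completes first), and the `Task` fields and weight values are assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class Task:
    title: str
    importance: int  # 1 (low) to 5 (high), chosen by the user
    due: datetime


def priority(task: Task, now: datetime, deadline_weight: float = 0.5) -> float:
    """Score in [0, 1]; higher means the task should be surfaced first."""
    importance = (task.importance - 1) / 4  # map 1..5 onto 0..1
    hours_left = max((task.due - now).total_seconds() / 3600, 0.0)
    # Urgency is 1.0 when the task is due now (or overdue) and 0.5 with 24 h left.
    urgency = 1.0 / (1.0 + hours_left / 24)
    return (1 - deadline_weight) * importance + deadline_weight * urgency


def rank(tasks: list[Task], now: datetime) -> list[Task]:
    """Order tasks from highest to lowest priority."""
    return sorted(tasks, key=lambda t: priority(t, now), reverse=True)
```

Raising `deadline_weight` favors whatever is due soonest; lowering it favors what the user marked as important.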

The Kayak and Headspace case studies focus on authentication and architecture rather than AI itself, but they make the same point as the AI examples above: improving core user flows measurably boosts engagement and business performance. That same user-centric approach is what makes AI features succeed in Android apps.

3. Utilizing Machine Learning Kits for Android Development: A Comprehensive Guide

Integrating Machine Learning (ML) into Android applications has become much more straightforward thanks to ML kits and on-device runtimes. Google’s ML Kit (originally launched as ML Kit for Firebase) offers ready-to-use APIs for common mobile use cases such as text recognition, face detection, barcode scanning, image labeling, and object detection and tracking, while TensorFlow Lite and PyTorch Mobile let developers run their own or pre-trained models on the device. Together, these tools simplify adding complex AI capabilities, including image recognition, natural language processing, and predictive analytics, to Android apps.

These ML kits are valued for their flexibility: developers can choose between on-device and cloud-based APIs. On-device APIs process data quickly and work offline, while cloud-based APIs, which use Google Cloud’s machine learning technology, deliver higher accuracy.
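The on-device versus cloud trade-off can be expressed as a small selection policy. In this hypothetical Python sketch, `on_device` and `cloud` stand for any two recognizer functions (they are not ML Kit APIs); the policy uses the cloud only when the device is online and accuracy matters, and falls back to on-device inference if the network call fails.

```python
from __future__ import annotations

from typing import Callable


def recognize(
    image: bytes,
    on_device: Callable[[bytes], list[str]],
    cloud: Callable[[bytes], list[str]],
    is_online: bool,
    prefer_accuracy: bool,
) -> tuple[str, list[str]]:
    """Return (source, labels).

    Uses the cloud recognizer only when the device is online and the feature
    needs the higher accuracy; otherwise, or if the network call fails,
    falls back to fast, offline on-device inference.
    """
    if is_online and prefer_accuracy:
        try:
            return "cloud", cloud(image)
        except OSError:  # covers ConnectionError, TimeoutError, etc.
            pass
    return "on-device", on_device(image)
```

Returning the source alongside the labels lets the app tell users when a lower-accuracy offline result is being shown.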

Another advantage is that developers can deploy custom TensorFlow Lite models to ML Kit via Firebase. This greatly reduces the time and resources they would otherwise spend building AI models from scratch, letting them concentrate on unique app features and better user experiences. Using ML Kit involves integrating the SDK, preparing input data, applying the ML model to generate insights, and using those insights to power features in the app.

Beyond ML itself, Android development relies on a range of tools and workflows for writing, debugging, building, and testing apps, and libraries such as the Android platform, Jetpack, Compose, and Google Play services extend app functionality. ML capabilities can be added to Android apps to process images, sound, and text without sending user data to the cloud, enabling offline functionality and reducing costs.

From a developer’s perspective, the Android platform is constantly progressing with updates, releases, and advancements in machine learning, gaming, privacy, and 5G. Android for Enterprise focuses on security and provides features for enterprise app development. Android Studio is the recommended IDE for Android development, with numerous resources available for learning, including guides, reference documentation, samples, and libraries on GitHub. Android Studio, NDK, and bug reporting tools serve as development support, while Google Play, Firebase, and Google Cloud Platform offer additional services and integration options.

In essence, ML kits offer a practical way to add advanced AI functionality to Android apps. By relying on them, developers can devote their resources to improving user experiences and building unique app features, getting the most out of their Android apps.

4. Enhancing App Functionality with TensorFlow Lite: A Quickstart Guide

TensorFlow Lite is a robust tool that aids in integrating AI capabilities into Android applications. It is a lightweight framework that empowers developers to run machine learning models on mobile devices, promoting on-device inference and reducing latency.

TensorFlow Lite is part of the ecosystem built around Google’s open-source machine learning library, TensorFlow. A sibling library, TensorFlow.js, is purpose-built for JavaScript and runs models in the browser and in Node.js, but when it comes to deployment on mobile and edge devices, TensorFlow Lite is the tool designed for the job.

The TensorFlow ecosystem includes several libraries, each aimed at different use cases. TensorFlow.js and TensorFlow Lite each come with their own resources, datasets, and pre-trained models, giving developers a well-rounded toolkit for their machine learning needs.

Beyond tools and libraries, the TensorFlow platform offers a certificate program for those who want to demonstrate their proficiency in machine learning. It also provides educational resources for learning the basics of machine learning, along with responsible AI resources and tools for incorporating ethical practices into the machine learning workflow.

TensorFlow Lite does more than run machine learning models: it offers both prebuilt and customizable execution environments, making it a versatile tool for Android development. Note, however, that TensorFlow Lite models use a different format from TensorFlow models (the compact .tflite format, based on FlatBuffers), so the two are not interchangeable and a TensorFlow model must be converted before it can run on the device. TensorFlow Lite models also require a dedicated runtime, and they exchange data as tensors, that is, multidimensional arrays of numbers.

For those who want to push further, TensorFlow Lite also supports advanced development paths such as building custom runtime environments and even server-based model execution.

In Android app development, TensorFlow Lite can run prebuilt models or TensorFlow models converted to its format. Running a model involves three steps: transforming the input data into tensors with the shape the model expects, running inference with the runtime, the model, and the input data, and processing the prediction results, which the model returns as tensors, in the app.
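The pre- and post-processing steps around an inference call can be sketched in plain Python. The example assumes a hypothetical image classifier that takes a `[1, height, width, 3]` float input scaled to [0, 1] and returns one raw score (logit) per label; real models document their own input shape, normalization, and output format (many already output probabilities, in which case the softmax step is unnecessary). The inference call itself, which the TensorFlow Lite runtime performs, is omitted.

```python
from __future__ import annotations

import math

# Assumed model contract: input [1, height, width, 3] floats in [0, 1];
# output one raw score (logit) per label.


def to_input_tensor(pixels: list[list[tuple[int, int, int]]]) -> list:
    """Convert rows of (r, g, b) 0-255 pixels into a nested list shaped
    [1, height, width, 3] with values scaled to [0, 1]."""
    return [[[[channel / 255.0 for channel in px] for px in row] for row in pixels]]


def shape(tensor) -> tuple[int, ...]:
    """Shape of a rectangular nested list, e.g. (1, 224, 224, 3)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(output: list[float], labels: list[str], k: int = 3) -> list[tuple[str, float]]:
    """Turn an output tensor of shape [num_labels] into the k most likely
    (label, probability) pairs."""
    probs = softmax(output)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

Checking `shape(...)` against the model’s expected input shape before inference is a cheap way to catch the most common integration bug.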

Integrating TensorFlow Lite into Android apps is both powerful and efficient: it can use hardware acceleration (through delegates for the GPU or the Neural Networks API) to speed up model execution, resulting in a smooth user experience. This guide is a first step toward unlocking TensorFlow Lite’s potential for Android app development, with a practical focus on features such as image classification, object detection, and speech recognition.

For those who want to dive deeper, many resources, tutorials, examples, and support channels are available for TensorFlow Lite development, opening up a wide range of possibilities for Android apps.

To further optimize the performance of TensorFlow Lite for mobile devices, several strategies can be applied.

These include:

- Quantization, which converts the model’s weights and activations to lower-precision formats (for example, 8-bit integers instead of 32-bit floats), reducing memory usage and improving inference speed.

- Model optimization techniques such as pruning and weight sharing, which reduce the size and complexity of the model and lead to faster inference.

- Hardware acceleration, which uses the device’s specialized hardware to greatly speed up inference.

- Operator fusion, which combines multiple operations into a single fused operation, reducing memory access and improving cache utilization.

- Input/output optimization, which minimizes data-transfer overhead.

Applying these techniques lets TensorFlow Lite run machine learning inference faster and more efficiently on mobile devices.
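The arithmetic behind two of these techniques is easy to sketch. The Python example below shows affine 8-bit quantization, in which a float value is stored as an integer `q` with `real_value = (q - zero_point) * scale`, and magnitude-based pruning, which zeroes out the smallest weights. Since a float32 weight takes 4 bytes and an int8 weight takes 1, quantizing weights shrinks their storage roughly fourfold. This illustrates the math only; it is not the TensorFlow Lite converter, which chooses these parameters itself.

```python
from __future__ import annotations


def quant_params(values: list[float]) -> tuple[float, int]:
    """Pick scale and zero point so [min, max] maps onto int8 [-128, 127].

    The range is widened to include 0.0 so that zero is exactly representable.
    """
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # guard against an all-zero tensor
    zero_point = round(-128 - lo / scale)
    return scale, zero_point


def quantize(values: list[float], scale: float, zero_point: int) -> list[int]:
    """q = round(x / scale) + zero_point, clamped to the int8 range."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(qs: list[int], scale: float, zero_point: int) -> list[float]:
    """real_value = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qs]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned
```

The round trip through `quantize` and `dequantize` loses at most about half a `scale` step per value, which is why 8-bit models usually stay close to the original accuracy.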

5. Harnessing the Power of On-Device Machine Learning for Android Apps

Running machine learning directly on Android devices significantly expands what apps can do. Data is processed locally and responses are immediate, with no need to send data to a server. The benefits are twofold: users enjoy faster, more responsive experiences, and data security improves because information stays on the user’s device.

Android 11 has ushered in substantial updates to on-device machine learning, including the introduction of the ML Model Binding Plugin and an updated ML Kit. The ML Model Binding Plugin notably streamlines the integration of custom TensorFlow Lite models into Android applications, eliminating the need for manual conversion to byte arrays.

The plugin also makes it easier to use the GPU and the Neural Networks API (NNAPI) for acceleration, further improving the speed and efficiency of applications. The updated ML Kit now supports custom models, enabling developers to use TensorFlow Lite models for image classification and object detection.

Furthermore, with Android 11, developers gain access to pre-trained models and resources such as TensorFlow Hub and TensorFlow Lite Model Maker, simplifying the process of constructing and discovering models ideal for their applications.

In the modern Android development landscape, the emphasis lies on utilizing a declarative approach to UI and Kotlin’s simplicity to develop applications with less code. Android Studio, the recommended IDE for Android development, provides features for writing and debugging code, building projects, testing app performance, and using command line tools.

In addition, libraries such as Android platform, Jetpack, Compose, and Google Play services can be utilized to further amplify the functionality of Android applications. With the integration of machine learning, developers can incorporate features to process images, sound, and text in their apps.

ML Kit provides ready-to-use solutions to common problems and doesn’t require ML expertise. Custom ML models can be deployed using Android’s custom ML stack, built on TensorFlow Lite and Google Play services. TensorFlow Lite can be used for high-performance ML inference in apps, with hardware acceleration for optimized performance.

Google Play services provide access to the TensorFlow Lite runtime and delegates for hardware acceleration. Code samples and resources are available for developers to learn and implement ML features in their apps. This way, on-device machine learning not only augments the functionality of Android apps but also boosts their responsiveness and security.

To further enhance user experiences with on-device machine learning in Android apps, developers can harness platforms like AppsGeyser. Integrating machine learning models directly into the app allows developers to offer personalized and context-aware experiences to users, including features such as smart suggestions, predictive text input, real-time translation, and image recognition. Processing data locally on the device allows apps to deliver faster response times and enhanced privacy, as sensitive data doesn’t need to be sent to external servers. Furthermore, on-device machine learning can function offline, ensuring a seamless experience even without an internet connection.

To strengthen data privacy with on-device machine learning in Android apps, developers can implement security measures such as encryption and data anonymization to protect sensitive user information and prevent unauthorized access. They can also add privacy settings that give users control over the data they share and the permissions they grant to the app. By adopting these practices, Android app developers can prioritize user privacy and keep their apps secure.
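One common anonymization-style technique is to replace raw identifiers with a keyed hash before any analytics or logging. The Python sketch below uses HMAC-SHA256 from the standard library and drops direct identifiers; the field names and the key handling are assumptions (on Android, the key would typically be protected with secure storage such as the Android Keystore). Strictly speaking this is pseudonymization rather than full anonymization: anyone holding the key can still link tokens to known IDs.

```python
import hashlib
import hmac
import secrets

# Assumption: a per-install secret generated once and kept in secure storage.
# Here it is simply random bytes held in memory.
DEVICE_KEY = secrets.token_bytes(32)

# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"email", "phone", "name"}


def pseudonymize(user_id: str, key: bytes = DEVICE_KEY) -> str:
    """Replace an identifier with its HMAC-SHA256 hex digest.

    The same ID always yields the same token under one key, so events can
    still be grouped, but the token cannot be linked back to the ID
    without the key.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_event(event: dict, key: bytes = DEVICE_KEY) -> dict:
    """Return a copy of an analytics event that is safer to log:
    direct identifiers are dropped and the user ID is pseudonymized."""
    cleaned = {k: v for k, v in event.items() if k not in DIRECT_IDENTIFIERS}
    if "user_id" in cleaned:
        cleaned["user_id"] = pseudonymize(cleaned["user_id"], key)
    return cleaned
```

Because `scrub_event` returns a new dictionary, the original event is left untouched for any on-device use that legitimately needs it.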

6. Integrating Text Recognition and Face Detection Features in Android Apps

Artificial intelligence (AI) offers a wealth of features that can considerably enhance the functionality of Android applications. Among these are text recognition and face detection, each bringing a unique set of benefits. Text recognition, for instance, broadens the scope of possibilities, enabling the scanning and translation of text, or the extraction of information from documents. Face detection, on the other hand, can be utilized to bolster security via biometric authentication or to introduce playful elements in photo editing applications.

Google’s ML Kit and Firebase ML play a pivotal role in delivering these features. With ML Kit, developers can recognize text in images or videos. ML Kit’s text recognition comes in two variants: an unbundled library (“com.google.android.gms:play-services-mlkit-text-recognition”), whose model is downloaded dynamically via Google Play Services, and a bundled library (“com.google.mlkit:text-recognition”), whose model is statically linked into the app at build time. The trade-off is app size: the unbundled variant adds roughly 260 KB per script architecture, while the bundled variant adds about 4 MB per script architecture.

The text recognition operation returns a Text object containing the recognized text and its structure. The text is organized into blocks, lines, elements, and symbols, each of which provides information such as the recognized text, bounding coordinates, rotation, and a confidence score. For accurate recognition, input images should contain characters that are at least 16×16 pixels in size.
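The block, line, and element hierarchy lends itself to simple post-processing. The Python sketch below uses hypothetical stand-in classes (not ML Kit’s own types) to rebuild the recognized text while discarding low-confidence elements, and turns the 16×16 pixel guideline into a quick sizing check: a line of 40 characters spanning the full image width needs an image at least 640 pixels wide. The confidence threshold of 0.5 is an arbitrary example.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical stand-ins mirroring the block -> line -> element hierarchy;
# these are not ML Kit's own classes.


@dataclass
class Element:
    text: str
    confidence: float


@dataclass
class Line:
    elements: list[Element] = field(default_factory=list)


@dataclass
class Block:
    lines: list[Line] = field(default_factory=list)


def confident_text(blocks: list[Block], min_confidence: float = 0.5) -> str:
    """Rebuild the recognized text, skipping low-confidence elements.

    Elements are joined by spaces, lines by newlines, blocks by blank lines.
    """
    block_texts = []
    for block in blocks:
        line_texts = []
        for line in block.lines:
            words = [e.text for e in line.elements if e.confidence >= min_confidence]
            if words:
                line_texts.append(" ".join(words))
        if line_texts:
            block_texts.append("\n".join(line_texts))
    return "\n\n".join(block_texts)


def min_image_width(chars_per_line: int, min_char_px: int = 16) -> int:
    """Rough minimum image width (px) for text spanning the full width,
    so that each character is at least min_char_px pixels wide."""
    return chars_per_line * min_char_px
```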

In parallel, Firebase ML offers tools for identifying text in images. By utilizing the Firebase ML Vision SDK, developers can recognize text in images such as street signs or documents. The outcome of the text recognition operation is a FirebaseVisionText object, which includes the recognized text and its structure. This recognized text is arranged into blocks, paragraphs, lines, and elements, and each of these objects contains the recognized text and its bounding coordinates.

To integrate text recognition into Android apps, developers can follow a step-by-step guide that explains how to implement the feature and extract text from images or documents. By integrating these AI features, developers can build Android apps that are more versatile, interactive, and user-friendly.

7. Leveraging Low Latency AI Features for Improved User Experience in Android Apps

A robust AI-powered application must respond to user actions seamlessly and immediately. This is critical for apps that rely heavily on real-time AI functionality, such as voice assistants, real-time translators, and games. A notable example from outside the Android world is Minerva CQ, a collaborative AI startup that uses technologies from Amazon Web Services (AWS) and NVIDIA to improve contact center support. By combining these technologies, Minerva CQ provides real-time AI-powered guidance and insights to contact center agents, significantly shortening customer service calls.

Minerva CQ utilizes Amazon Elastic Compute Cloud (EC2) P4d instances powered by NVIDIA A100 Tensor Core GPUs to ensure the necessary computational power for running its AI solution. This strategic choice of technologies has resulted in a 20-40% reduction in average call handling time in contact centers, a testament to the power of low latency AI features. The company plans to leverage more AI capabilities to further evolve its CQ solution and improve its efficiency.

In the same vein, Zappos, a leading online apparel retailer, has utilized analytics and machine learning on AWS to enhance its customer experience. Aiming to provide personalized recommendations and increase search relevance, Zappos has leveraged AWS services such as Amazon Kinesis Data Firehose, Amazon Redshift, and Amazon EMR, to process and analyze data and run machine learning models. This has led to faster search results, improved personalized sizing recommendations, reduced product returns, and higher search-to-clickthrough rates.

Zappos also uses infrastructure as code with AWS CloudFormation for easy scalability and deployment. Although both examples run on cloud infrastructure rather than on the device, they underline the same point: low latency is critical to making AI-powered apps responsive, efficient, and user-friendly.
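On the device, the practical question is whether an AI feature responds within a latency budget. The Python sketch below times repeated calls to any function (a stand-in for a model’s inference call) and judges responsiveness by the 95th percentile rather than the average, since occasional slow responses are what users notice. The 100 ms budget is an assumed example, not a standard.

```python
from __future__ import annotations

import math
import time
from typing import Callable


def measure_latencies(fn: Callable[[], object], runs: int = 50) -> list[float]:
    """Call fn repeatedly and record each call's wall-clock time in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile, for p in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def within_budget(samples: list[float], budget_ms: float = 100.0) -> bool:
    """Judge responsiveness by the tail latency (p95), not the average."""
    return percentile(samples, 95) <= budget_ms
```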

8. Future Trends: The Impact of AI on the Evolution of Android App Development

As we move deeper into the digital age, Artificial Intelligence (AI) has proven to be more than a passing trend. It is a game-changer in Android app development, and its rapid evolution has produced tools that expand app functionality and enrich user experiences.

The influence of AI on mobile applications is evident, with tech giants such as Facebook, Microsoft, Google, and IBM leading the way. Gartner reported a 300% increase in AI integration from 2018 to 2019, reflecting this surge.

AI has found a significant application in chatbots, changing the way businesses interact with their customers. AI-powered chatbots and voice assistants such as Siri, Alexa, and Google Assistant are now commonplace, and chatbots enhance apps across sectors such as online shopping, transportation, and food delivery.

To integrate AI into Android app development, developers can leverage various AI frameworks and libraries available specifically for Android. These tools offer pre-trained models and APIs for tasks like image recognition, natural language processing, and speech recognition, making your app smarter and more interactive.

The advent of 5G technology is another pivotal development set to transform the mobile app industry. With peak speeds often cited as up to 100 times faster than 4G, 5G should significantly improve app speed and user experience.

The Internet of Things (IoT) is another key player, bringing automation and seamless connectivity across various devices, including smartphones, smartwatches, and TVs. The integration of IoT in mobile apps is expected to boost functionality and pave the way for the development of smart homes and cities.

The rise of mobile commerce (m-commerce) is another trend shaping the future of mobile apps. Mobile wallet apps like Paytm, PayPal, Google Pay, and PhonePe are testament to this trend, offering users a convenient way to make online transactions.

Augmented Reality (AR) and Virtual Reality (VR) technologies are also being utilized across various industries such as education, gaming, entertainment, shopping, healthcare, and travel.

Foldable smartphones are another noteworthy development, with around 19% of Android users and 17% of iOS users expressing interest in these devices.

Beacon technology is being used in sectors like hotels, retail, and healthcare to monitor customer behavior and track product interaction, enhancing the shopping experience.

Cloud-based apps like Salesforce, Google Drive, and Slack are also shaping the future of mobile apps, allowing users to store data in the cloud and save internal device space.

When integrating AI into Android app development, it is crucial to plan and define the specific AI functionalities and features you want to incorporate. This includes selecting the right AI algorithms, models, and frameworks that align with your app’s objectives and requirements.

Ensuring an intuitive and seamless design and user interface (UI) is vital when integrating AI-powered features. Providing clear instructions and feedback to users will enhance the overall user experience.

Considering the performance and efficiency of AI algorithms is also essential. Techniques such as caching, parallel processing, and data compression should be leveraged to ensure AI computations do not impact the app’s performance negatively.
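Caching is often the cheapest of these techniques to apply. The Python sketch below wraps a hypothetical `model` function in a least-recently-used (LRU) cache keyed by a hash of the input bytes, so that repeating the same input, such as re-opening the same photo, skips a second inference. The capacity is an assumed example.

```python
from __future__ import annotations

import hashlib
from collections import OrderedDict
from typing import Callable


class InferenceCache:
    """LRU cache in front of a model: identical inputs skip re-inference."""

    def __init__(self, model: Callable[[bytes], object], capacity: int = 32) -> None:
        self.model = model  # hypothetical inference function
        self.capacity = capacity
        self.entries: OrderedDict[str, object] = OrderedDict()
        self.model_calls = 0  # how many times inference actually ran

    def predict(self, data: bytes) -> object:
        key = hashlib.sha256(data).hexdigest()  # fixed-size key for any input
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        result = self.model(data)
        self.model_calls += 1
        self.entries[key] = result
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used
        return result
```

On Android, `android.util.LruCache` provides the same eviction behavior, and the inference itself should run off the main thread so that a cache miss never blocks the UI.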

Lastly, continuous testing and evaluation of the AI integration in your Android app are crucial. This includes conducting user testing and gathering feedback to identify and address any issues or areas for improvement.

By keeping pace with these trends, developers can keep their apps relevant and competitive in a dynamic app market. The future of Android app development will be shaped by these trends, with AI taking the helm and driving innovation and progress.

Conclusion

In conclusion, the integration of Artificial Intelligence (AI) in Android app development has revolutionized the field, enhancing user experiences and app functionality. AI-powered features such as text recognition and face detection have transformed the way users interact with Android apps, offering personalized recommendations, real-time translations, improved security, and more. The impact of AI on Android app development is evident in the diverse range of possibilities it offers, from chatbots and virtual assistants to predictive analytics and emotion recognition. With the projected growth of the mobile app market and the importance of staying competitive in today’s digital landscape, leveraging AI in Android app development is crucial.

Looking ahead, the future of Android app development will be shaped by trends such as 5G technology, Internet of Things (IoT), augmented reality (AR), virtual reality (VR), foldable smartphones, beacon technology, and cloud-based apps. These trends present opportunities for developers to create innovative and immersive experiences for users. However, integrating AI into Android apps requires careful planning and consideration of factors such as selecting the right AI algorithms and frameworks, ensuring intuitive design and user interface (UI), optimizing performance, and continuous testing and evaluation. By staying informed about these trends and leveraging AI capabilities effectively, developers can create Android apps that are at the forefront of innovation.

To stay competitive in today’s digital landscape, start leveraging AI in your Android app development now!